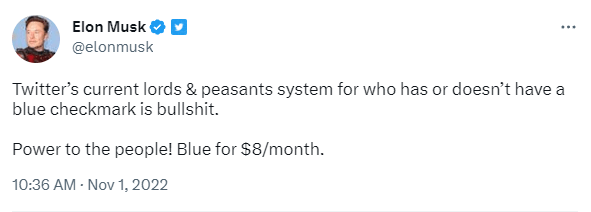

Let’s start this post out by noting that a key reason Elon Musk said he was getting rid of the legacy Twitter verification system was that it was arbitrary and unfair and created a “lords and peasants” scenario. Keep that in mind, because you’re going to want to remember that by the end of this article.

Anyway… what a weird few days on Twitter. Late last week, Twitter finally got around to doing the thing Elon Musk had promised would happen at several earlier dates (including a firm deadline promised at the beginning of April): removing the “blue checkmarks” from legacy verified accounts.

As we’ve detailed over and over again, Elon’s decision to combine Twitter Blue and Twitter “verification” (loosely speaking) never made any sense. It misunderstands the point of verification and undermines the value of Twitter Blue. It’s kind of self-defeating, and most people recognize this, which is why he’s struggled to get people to sign up for it.

As part of the great removal, Musk seemed to think that maybe it would push those legacy verified accounts to pay up. That, well, didn’t happen. Travis Brown, a researcher who’s been the most thorough in tracking all of this and had created a database of the over 400,000 legacy verified accounts, noted that as the blue checks were removed… a net total of 28 new Twitter Blue accounts were created.

28.

Yes. Just 28. That’s net total, meaning that somewhat more than 28 people who had been legacy verified signed up, but a bunch of people also canceled their Twitter Blue account, so when you net it out, it was plus 28. Which is… nothing.

Again, as we’ve pointed out repeatedly, there are all sorts of things that Elon Musk could have done to make Twitter Blue worth subscribing to. But, instead, he focused on the “blue check” as if that were valuable. It was never valuable. And by changing it from a “verified” badge to a “this mfer paid for Elon’s Twitter” badge, he actually devalued it massively.

Either way, this resulted in a pretty stupid Twitter war, in which some people started pushing a kinda silly “Block the Blue” campaign, urging Twitter users to block anyone with the blue checkmark. And then Elon supporters with the blue checkmark went on an equally silly campaign to yell at people for not giving a billionaire $8. Frankly, neither side looked particularly good in all of this.

But, into that mix came chaos in the form of (of course) Elon Musk.

First, people noticed that a few celebrities who had very, very publicly stated that they would not pay for Twitter Blue were showing up as having paid and been “verified” by their phone number. This included LeBron James and Stephen King, among others. Elon admitted in a response to King that he had gifted him a Twitter Blue account.

Musk separately admitted that he was “paying for a few personally” to give them a Twitter Blue account, though he later said it was just LeBron James, Stephen King, and William Shatner — all three of whom had very publicly stated they had no interest in paying.

This made me wonder if he was opening himself up to yet another lawsuit. Given how much Elon has basically turned paying for Twitter Blue into an “endorsement” of the new Twitter and Elon himself, putting that label on the accounts of people who have not paid for it and don’t seem to want it could violate a number of laws, including the Lanham Act’s prohibition on false endorsement as well as a variety of publicity rights claims.

In general, I’m not a huge fan of publicity rights claims, because they are commonly used by the rich and powerful to silence speech. The original point of publicity rights laws was to stop false endorsement claims, whereby a commercial entity was using the implied association of a famous person to act as a promotion or endorsement of the commercial entity or its products.

Which, uh, seems to be exactly what Musk was doing in paying for Blue for some celebrities.

Separately, there are a bunch of questions about how this might violate EU data protection laws, but we’ll wait and see how the EU handles that.

Of course, then things got stupider. Remember the deal with Elon Musk: it can always get stupider.

As that “Block the Blue” campaign started getting more attention (and even started trending on Twitter), Musk gave the ringleaders of the campaign Twitter Blue. Yes, you read that right. Musk gave the people who were telling everyone to block anyone with a blue checkmark… a blue checkmark. And then admitted it by joking about how he was a troll.

Of course… as Twitter user Mobute noted, this is Musk admitting that the trolliest insult he can think of is to say someone is associated with him and his company.

Seems… kinda… like a self own?

Anyway, a few hours later, people started noticing that a ton of other accounts of famous people also started showing up with Twitter Blue despite not paying for it, nor wanting it. Some people said that it was being given to anyone with over 1 million followers, though there were some other accounts with fewer that got it and said they didn’t want it, like Terry Pratchett.

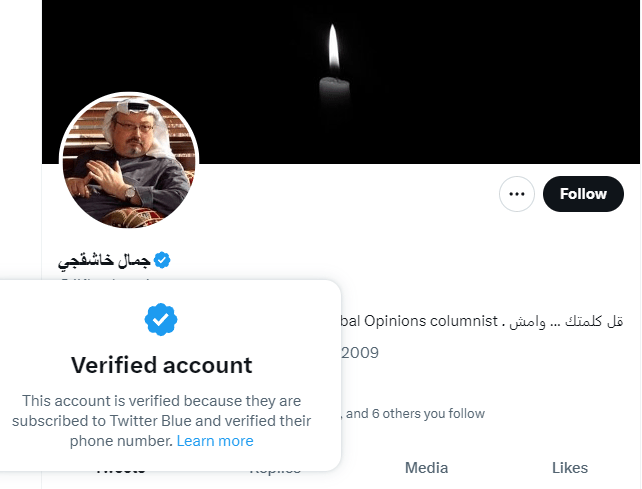

Still, it seemed that most accounts suddenly getting Blue without paying, requesting, or verifying their phone numbers had a million or more followers. And this included a large number of dead celebrities, where Twitter claims (if you click on their blue checkmark) that they paid and verified their phone number, which is pretty hard to do when you’re dead. There were lots of people who fell into this camp, including Chadwick Boseman, Norm Macdonald, Michael Jackson, and the aforementioned Terry Pratchett.

Oh, and Jamal Khashoggi, who was somewhat famously murdered by the Saudi government. I get the feeling he did not, in fact, confirm his phone number.

There have been questions about the publicity rights of dead people, and I’m a strong believer in the idea that publicity rights go away after death. But, you know, it’s still a bad look.

The end result, though, is bizarre. Even as Musk is claiming that “everyone has to pay the same,” that’s clearly no longer true:

He’s literally handing them out for free (1) based on levels of fame via follower counts, (2) if he just arbitrarily decides to, or (3) to troll people.

Which, you know, his site, he can do whatever the fuck he wants, but remember what I said up top? His entire reason for going down this path was to get rid of the arbitrary check mark system that created a “lords and peasants” setup where people deemed notable by Twitter got a check mark, and he was bringing back “power to the people.”

But… as seems to keep happening, Elon Musk brings back a system he decried as stupid, but brings it back in a much stupider, and much worse, manner. So now there’s still an arbitrary lords and peasants system, but rather than one where at least someone is trying to determine if a person is notable and needs to be verified, it’s now an arbitrary cut-off on followers, or if Elon thinks it’s funny. Which seems way more arbitrary and stupid than the old system.